Elasticsearch has long been used for a wide variety of real-time analytics use cases, including log storage and analysis and search applications. The reason it’s so popular is because of how it indexes data so it’s efficient for search. However, this comes with a cost in that joining documents is less efficient.

There are ways to build relationships in Elasticsearch documents, most common are: nested objects, parent-child joins, and application side joins. Each of these has different use cases and drawbacks versus the natural SQL joining approach that is provided by technologies like Rockset.

In this post, I’ll talk through a common Elasticsearch and Rockset use case, walk through how you could implement it with application-side joins in Elasticsearch, and then show how the same functionality is provided in Rockset.

Use Case: Online Marketplace

Elasticsearch would be a great tool to use for an online marketplace as the most common way to find products is via search. Vendors upload products along with product info and descriptions that all need to be indexed so users can find them using the search capability on the website.

This is a common use case for a tool like Elasticsearch as it would provide fast search results across not only product names but descriptions too, helping to return the most relevant results.

Users searching for products will not only want the most relevant results displayed at the top but the most relevant with the best reviews or most purchases. We will also need to store this data in Elasticsearch. This means we will have 3 types of data:

- product – all metadata about a product including its name, description, price, category, and image

- purchase – a log of all purchases of a specific product, including date and time of purchase, user id, and quantity

- review – customer reviews against a specific product including a star rating and full-text review

In this post, I won’t be showing you how to get this data into Elasticsearch, only how to use it. Whether you have each of these types of data in one index or separate doesn’t matter as we will be accessing them separately and joining them within our application.

Building with Elasticsearch

In Elasticsearch I have three indexes, one for each of the data types: product, purchase, and review. What we want to build is an application that allows you to search for a product and order the results by most purchases or best review scores.

To do this we will need to build three separate queries.

- Find relevant products based on search terms

- Count the number of purchases for each returned product

- Average the star rating for each returned product

These three queries will be executed and the data joined together within the application, before returning it to the front end to display the results. This is because Elasticsearch doesn’t natively support SQL like joins.

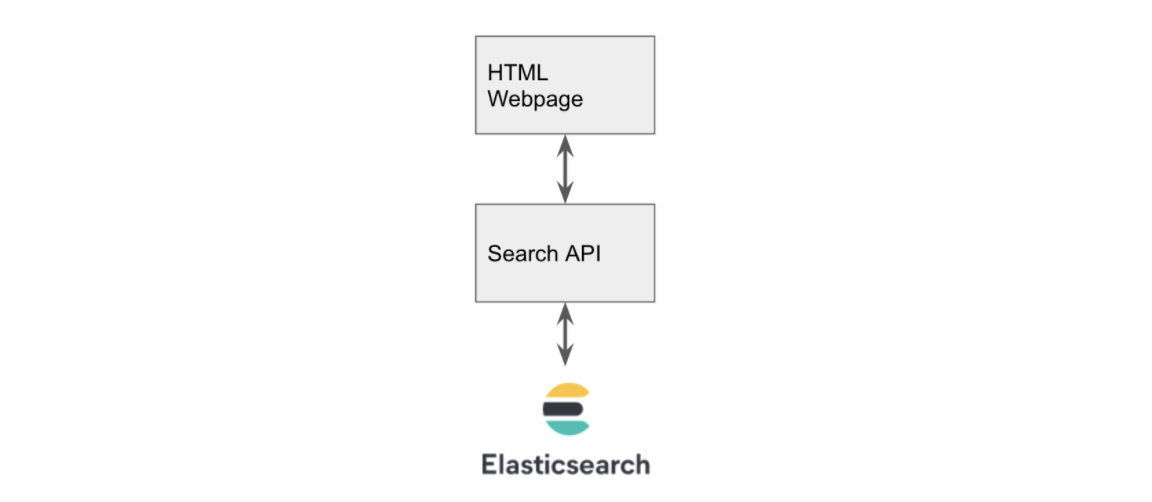

To do this, I’ve built a simple search page using Vue and used Axios to make calls to my API. The API I have built is a simple node express API that is a wrapper around the Elasticsearch API. This will allow the front end to pass in the search terms and have the API execute the 3 queries and perform the join before sending the data back to the front end.

This is an important design consideration when building an application on top of Elasticsearch, especially when application-side joins are required. You don’t want the client to join data together locally on a user’s machine so a server-side application is required to take care of this.

The application architecture is shown in Fig 1.

Fig 1. Application Architecture

Building the Front End

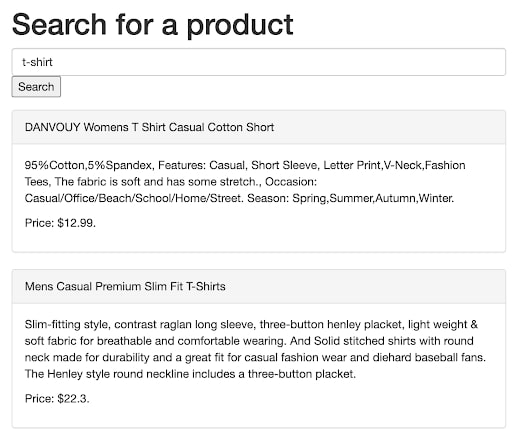

The front end consists of a simple search box and button. It displays each result in a box with the product name at the top and the description and price below. The important part is the script tag within this HTML file that sends the data to our API. The code is shown below.

<script>

new Vue({

el: "#app",

data: {

results: [],

query: "",

},

methods: {

// make request to our API passing in query string

search: function () {

axios

.get("http://127.0.0.1:3001/search?q=" + this.query)

.then((response) => {

this.results = response.data;

});

},

// this function is called on button press which calls search

submitBut: function () {

this.search();

},

},

});

</script>

It uses Axios to call our API that is running on port 3001. When the search button is clicked, it calls the /search endpoint and passes in the search string from the search box. The results are then displayed on the page as shown in Fig 2.

Fig 2. Example of the front end displaying results

For this to work, we need to build an API that calls Elasticsearch on our behalf. To do this we will be using NodeJS to build a simple Express API.

The API needs a /search endpoint that when called with the parameters ?q=<search term> it can perform a match request to Elasticsearch. There are many blog posts detailing how to build an Express API, I’ll concentrate on what is required on top of this to make calls to Elasticsearch.

Firstly we need to install and use the Elasticsearch NodeJS library to instantiate a client.

const elasticsearch = require("elasticsearch");

const client = new elasticsearch.Client({

hosts: ["http://localhost:9200"],

});

Then we need to define our search endpoint that uses this client to search for our products in Elasticsearch.

app.get("/search", function (req, res) {

// build the query we want to pass to ES

let body = {

size: 200,

from: 0,

query: {

bool: {

should: [

{ match: { title: req.query["q"] } },

{ match: { description: req.query["q"] } },

],

},

},

};

// tell ES to perform the search on the 'product' index and return the results

client

.search({ index: "product", body: body })

.then((results) => {

res.send(results.hits.hits);

})

.catch((err) => {

console.log(err);

res.send([]);

});

});

Note that in the query we are asking Elasticsearch to look for our search term in either the product title or description using the “should” keyword.

Once this API is up and running our front end should now be able to search for and display results from Elasticsearch as shown in Fig 2.

Counting the Number of Purchases

Now we need to get the number of purchases made for each of the returned products and join it to our product list. We’ll be doing this in the API by creating a simple function that calls Elasticsearch and counts the number of purchases for the returned product_id’s.

const getNumberPurchases = async (results) => {

const productIds = results.hits.hits.map((product) => product._id);

let body = {

size: 200,

from: 0,

query: {

bool: {

filter: [{ terms: { product_id: productIds } }],

},

},

aggs: {

group_by_product: {

terms: { field: "product_id" },

},

},

};

const purchases = await client

.search({ index: "purchase", body: body })

.then((results) => {

return results.aggregations.group_by_product.buckets;

});

return purchases;

};

To do this we search the purchase index and filter using a list of product_id’s that were returned from our initial search. We add an aggregation that groups by product_id using the terms keyword which by default returns a count.

Average Star Rating

We repeat the process for the average star rating but the payload we send to Elasticsearch is slightly different because this time we want an average instead of a count.

let body = {

size: 200,

from: 0,

query: {

bool: {

filter: [{ terms: { product_id: productIds } }],

},

},

aggs: {

group_by_product: {

terms: { field: "product_id" },

aggs: {

average_rating: { avg: { field: "rating" } },

},

},

},

};

To do this we add another aggs that calculates the average of the rating field. The rest of the code stays the same apart from the index name we pass into the search call, we want to use the review index for this.

Joining the Results

Now we have all our data being returned from Elasticsearch, we now need a way to join it all together so the number of purchases and the average rating can be processed alongside each of the products allowing us to sort by the most purchased or best rated.

First, we build a generic mapping function that creates a lookup. Each key of this object will be a product_id and its value will be an object that contains the number of purchases and the average rating.

const buildLookup = (map = {}, data, key, inputFieldname, outputFieldname) => {

const dataMap = map;

data.map((item) => {

if (!dataMap[item[key]]) {

dataMap[item[key]] = {};

}

dataMap[item[key]][outputFieldname] = item[inputFieldname];

});

return dataMap;

};

We call this twice, the first time passing in the purchases and the second time the ratings (along with the output of the first call).

const pMap = buildLookup({},purchases, 'key', 'doc_count', 'number_purchases')

const rMap = buildLookup(pMap,ratings, 'key', 'average_rating', 'average_rating')

This returns an object that looks as follows:

{

'2': { number_purchases: 57, average_rating: 2.8461538461538463 },

'20': { number_purchases: 45, average_rating: 2.7586206896551726 }

}

There are two products here, product_id 2 and 20. Each of them has a number of purchases and an average rating. We can now use this map and join it back onto our initial list of products.

const join = (data, joinData, key) => {

return data.map((item) => {

item.stats = joinData[item[key]];

return item;

});

};

To do this I created a simple join function that takes the initial data, the data that you want to join, and the key required.

One of the products returned from Elasticsearch looks as follows:

{

"_index": "product",

"_type": "product",

"_id": "20",

"_score": 3.750173,

"_source": {

"title": "DANVOUY Womens T Shirt Casual Cotton Short",

"price": 12.99,

"description": "95%Cotton,5%Spandex, Features: Casual, Short Sleeve, Letter Print,V-Neck,Fashion Tees, The fabric is soft and has some stretch., Occasion: Casual/Office/Beach/School/Home/Street. Season: Spring,Summer,Autumn,Winter.",

"category": "women clothing",

"image": "https://fakestoreapi.com/img/61pHAEJ4NML._AC_UX679_.jpg"

}

}

The key we want is _id and we want to use that to look up the values from our map. Shown above. With a call to our join function like so: join(products, rMap, '_id'), we get our product returned but with a new stats property on it containing the purchases and rating.

{

"_index": "product",

"_type": "product",

"_id": "20",

"_score": 3.750173,

"_source": {

"title": "DANVOUY Womens T Shirt Casual Cotton Short",

"price": 12.99,

"description": "95%Cotton,5%Spandex, Features: Casual, Short Sleeve, Letter Print,V-Neck,Fashion Tees, The fabric is soft and has some stretch., Occasion: Casual/Office/Beach/School/Home/Street. Season: Spring,Summer,Autumn,Winter.",

"category": "women clothing",

"image": "https://fakestoreapi.com/img/61pHAEJ4NML._AC_UX679_.jpg"

},

"stats": { "number_purchases": 45, "average_rating": 2.7586206896551726 }

}

Now we have our data in a suitable format to be returned to the front end and used for sorting.

As you can see, there is quite a lot of work involved on the server-side here to get this to work. It only becomes more complex as you add more stats or start to introduce large result sets that require pagination.

Building with Rockset

Let’s look at implementing the same feature set but using Rockset. The front end will stay the same but we have two options when it comes to querying Rockset. We can either continue to use the bespoke API to handle our calls to Rockset (which will probably be the default approach for most applications) or we can get the front end to call Rockset directly using its inbuilt API.

In this post, I’ll focus on calling the Rockset API directly from the front end just to showcase how simple it is. One thing to note is that Elasticsearch also has a native API but we were unable to use it for this activity as we needed to join data together, something we don’t want to be doing on the client-side, hence the need to create a separate API layer.

Search for Products in Rockset

To replicate the effectiveness of the search results we get from Elasticsearch we will have to do a bit of processing on the description and title field in Rockset, fortunately, all of this can be done on the fly when the data is ingested into Rockset.

We simply need to set up a field mapping that will call Rockset’s Tokenize function as the data is ingested, this will create a new field that is an array of words. The Tokenize function takes a string and breaks it up into “tokens” (words) that are then in a better format for search later.

Now our data is ready for searching, we can build a query to perform the search for our term across our new tokenized fields. We’ll be doing this using Vue and Axios again, but this time Axios will be making the call directly to the Rockset API.

search: function() {

var data = JSON.stringify({"sql":{"query":"select * from commons.\"products\" WHERE SEARCH(CONTAINS(title_tokens, '" + this.query + "'),CONTAINS(description_tokens, '" + this.query+"') )OPTION(match_all = false)","parameters":[]}});

var config = {

method: 'post',

url: 'https://api.rs2.usw2.rockset.com/v1/orgs/self/queries',

headers: {

'Authorization': 'ApiKey <API KEY>',

'Content-Type': 'application/json'

},

data : data

};

axios(config)

.then( response => {

this.results = response.data.results;

})

}

The search function has been modified as above to produce a where clause that calls Rockset’s Search function. We call Search and ask it to return any results for either of our Tokenised fields using Contains, the OPTION(match_all = false) tells Rockset that only one of our fields needs to contain our search term. We then pass this statement to the Rockset API and set the results when they are returned so they can be displayed.

Calculating Stats in Rockset

Now we have the same core search functionality, we now want to add the number of purchases and average star rating for each of our products, so it can again be used for sorting our results.

When using Elasticsearch, this required building some server-side functionality into our API to make multiple requests to Elasticsearch and then join all of the results together. With Rockset we simply make an update to the select statement we use when calling the Rockset API. Rockset will take care of the calculations and joins all in one call.

"SELECT

products.*, purchases.number_purchases, reviews.average_rating

FROM

commons.products

LEFT JOIN (select product_id, count(*) as number_purchases

FROM commons.purchases

GROUP BY 1) purchases on products.id = purchases.product_id

LEFT JOIN (select product_id, AVG(CAST(rating as int)) average_rating

FROM commons.reviews

GROUP BY 1) reviews on products.id = reviews.product_id

WHERE" + whereClause

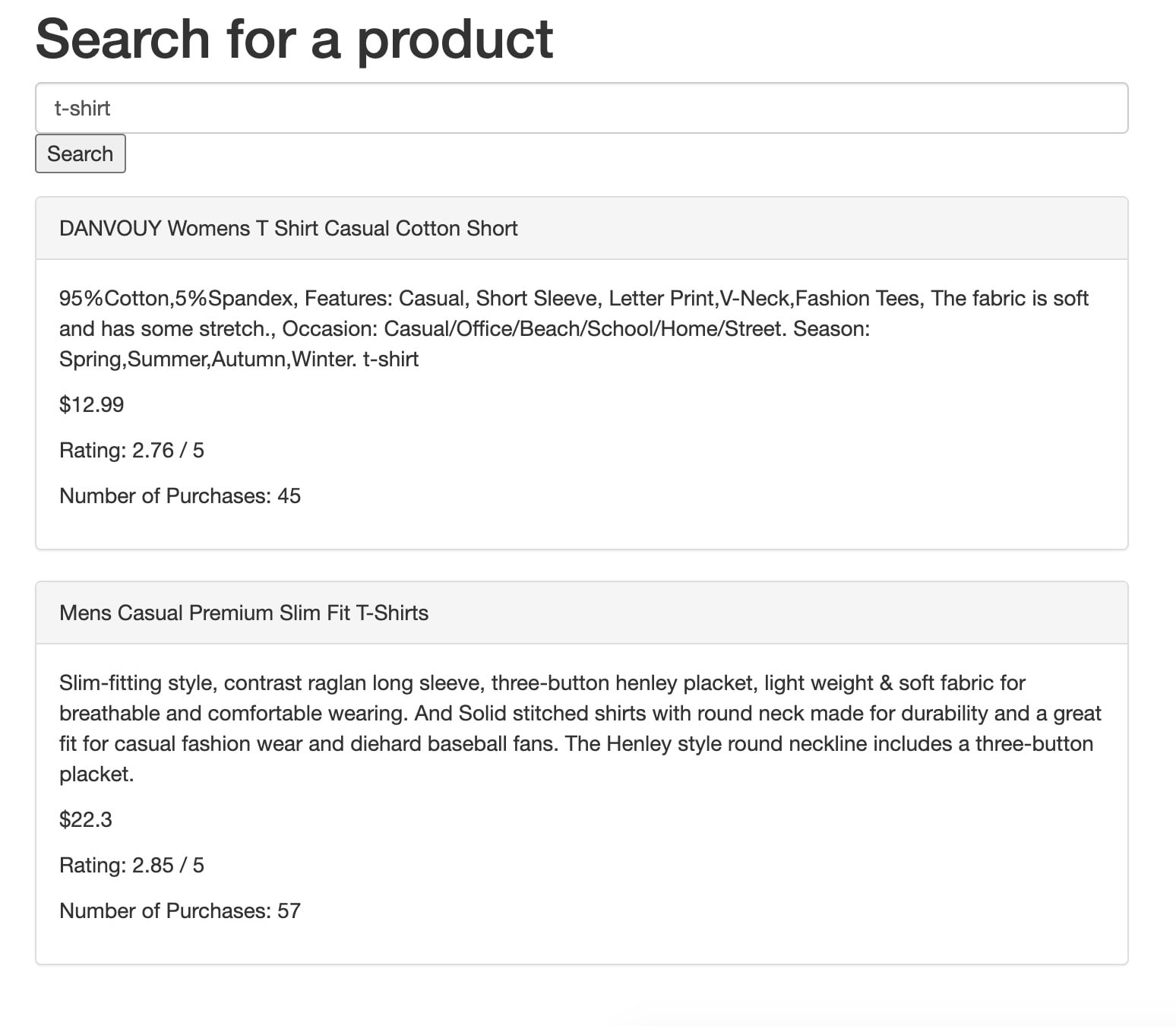

Our select statement is altered to contain two left joins that calculate the number of purchases and the average rating. All of the work is now done natively in Rockset. Fig 3 shows how these can then be displayed on the search results. It’s now a trivial activity to take this further and use these fields to filter and sort the results.

Fig 3. Results showing rating and number of purchases as returned from Rockset

Feature Comparison

Here’s a quick look at where the work is being done by each solution.

| Activity | Where is the work being done? Elasticsearch Solution | Where is the work being done? Rockset Solution |

|---|---|---|

| Search | Elasticsearch | Rockset |

| Calculating Stats | Elasticsearch | Rockset |

| Joining Stats to Search Results | Bespoke API | Rockset |

As you can see it is fairly comparable except for the joining part. For Elasticsearch, we have built bespoke functionality to join the datasets together as it isn’t possible natively. The Rockset approach requires no extra effort as it supports SQL joins. This means Rockset can take care of the end-to-end solution.

Overall we’re making fewer API calls and doing less work outside of the database making for a more elegant and efficient solution.

Conclusion

Although Elasticsearch has been the default data store for search for a very long time, its lack of SQL-like join support makes building some rather trivial applications quite difficult. You may have to manage joins natively within your application meaning more code to write, test, and maintain. An alternative solution may be to denormalize your data when writing to Elasticsearch, but that also comes with its own issues, such as amplifying the amount of storage needed and requiring additional engineering overhead.

By using Rockset, we may have to Tokenize our search fields on ingestion however we make up for it in firstly, the simplicity of processing this data on ingestion as well as easier querying, joining, and aggregating data. Rockset’s powerful integrations with existing data storage solutions like S3, MongoDB, and Kafka also mean that any further data required to supplement your solution can quickly be ingested and kept up to date. Read more about how Rockset compares to Elasticsearch and explore how to migrate to Rockset.

When selecting a database for your real-time analytics use case, it is important to consider how much query flexibility you would have should you need to join data now or in the future. This becomes increasingly relevant when your queries may change frequently, when new features need to be implemented or when new data sources are introduced. To experience how Rockset provides full-featured SQL queries on complex, semi-structured data, you can get started with a free Rockset account.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini where he helped the UK government move into the world of Big Data. He is currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he is helping to shape their data warehousing and reporting capability from the ground up.