Xu Hao uses chain of thought and generated knowledge prompting with ChatGPT when writing self-testing code

My account of an internal chat with Xu Hao, where he shows how he drives ChatGPT to produce useful self-tested code. His initial prompt primes the LLM with an implementation strategy (chain of thought prompting). His prompt also asks for an implementation plan rather than code (generated knowledge prompting). Once he has the plan he uses it to refine the implementation and generate useful sections of code.

Recently I watched a fascinating call on Zoom. Xu Hao, Thoughtworks’s Head of Technology in China, explained his explorations in using ChatGPT to help build Self Testing Code. He went through the kind of interaction that works for him.

He starts with a prompt that sets the context for the application and how he wants the code to be structured.

The current system is an online whiteboard system. Tech stack: typescript, react, redux, konvajs and react-konva. And vitest, react testing library for model, view model and related hooks, cypress component tests for view.

All code should be written in the tech stack mentioned above. Requirements should be implemented as react components in the MVVM architecture pattern.

There are 2 types of view model in the system.

1. Shared view model. View model that represents states shared among local and remote users.
2. Local view model. View model that represents states only applicable to the local user

Here are the common implementation strategies:

1. Shared view model is implemented as a Redux store slice. Tested in vitest.
2. Local view model is implemented as React component props or states (by useState hook), except for global local view model, which is also implemented as a Redux store slice. Tested in vitest.
3. Hooks are used as the major view helpers to retrieve data from shared view model. In most cases, they will use `createSelector` and `useSelector` for memoization. Tested in vitest and react testing library.
4. Don’t dispatch actions directly to change the states of shared view model; use an encapsulated view model interface instead. In the interface, each redux action is mapped to a method. Tested in vitest.
5. View consists of konva shapes, and is implemented as react component via react-konva. Tested in cypress component tests

Here are certain patterns that should be followed when implementing and testing the component

1. When writing tests, use `describe` instead of `test`
2. Data-driven tests are preferred.
3. When testing the view component, fake the view model via the view model interface

Awareness Layer

Requirement:

Display other users’ awareness info (cursor, name and online information) on the whiteboard.

AC1: Don’t display local user

AC2: When remote user changes cursor location, display the change in animation.

Provide an overall solution following the guidance mentioned above. Hint: keep all awareness information in a Konva layer, and an awareness info component to render cursor, and name. Don’t generate code. Describe the solution, and break the solution down as a task list based on the guidance mentioned above. And we will refer to this task list as our master plan.

There’s a lot going on with this prompt, so he highlighted a few points.

He’s using a generic application example here: one thing to be careful of when interacting with ChatGPT and the like is that we should never put anything that may be confidential into the prompt, as that would be a security risk. Business rules, any code from a real project: all these must not enter the interaction with ChatGPT.

Most of the prompt is setting out the design guidelines that he wants ChatGPT’s generated code to follow. He refined this by putting prompts into ChatGPT, looking at the result, and crafting the prompt to make it generate in the style he wanted. Once he has the context working, he can paste it into every session he has with ChatGPT, and share it with the rest of the team.
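The design guidelines in the prompt pin down a particular shape: shared state lives in a Redux store slice, components never dispatch actions directly but call an encapsulated view model interface with one method per action, and view tests swap in a fake behind that interface. The following is a minimal, dependency-free TypeScript sketch of that shape, assuming a hand-written reducer and store in place of Redux Toolkit so it runs on its own; every name in it (`AwarenessViewModel`, `createAwarenessViewModel`, `FakeAwarenessViewModel`, the action types) is hypothetical rather than taken from Xu Hao’s work.

```typescript
// Hypothetical, dependency-free sketch of the prompt's MVVM guidelines.
// A hand-written reducer and store stand in for a Redux Toolkit slice.
type CursorPosition = { x: number; y: number };
type UserAwareness = { name: string; cursor: CursorPosition; online: boolean };
type AwarenessState = { users: Record<string, UserAwareness> };

// Actions follow Redux's { type, payload } convention.
type AwarenessAction =
  | { type: 'awareness/moveCursor'; payload: { user: string; cursor: CursorPosition } }
  | { type: 'awareness/setOnline'; payload: { user: string; online: boolean } };
type Dispatch = (action: AwarenessAction) => void;

// Shared view model: a pure reducer over state shared by local and remote users.
function awarenessReducer(state: AwarenessState, action: AwarenessAction): AwarenessState {
  const current = state.users[action.payload.user];
  if (!current) return state; // ignore updates for unknown users
  switch (action.type) {
    case 'awareness/moveCursor':
      return { users: { ...state.users, [action.payload.user]: { ...current, cursor: action.payload.cursor } } };
    case 'awareness/setOnline':
      return { users: { ...state.users, [action.payload.user]: { ...current, online: action.payload.online } } };
  }
}

// Minimal store so the sketch runs without Redux.
function createStore(initial: AwarenessState) {
  let state = initial;
  const dispatch: Dispatch = (action) => {
    state = awarenessReducer(state, action);
  };
  return { getState: () => state, dispatch };
}

// Encapsulated view model interface: one method per action.
interface AwarenessViewModel {
  moveCursor(user: string, cursor: CursorPosition): void;
  setOnline(user: string, online: boolean): void;
}

// Production implementation: the only code that dispatches awareness actions.
function createAwarenessViewModel(dispatch: Dispatch): AwarenessViewModel {
  return {
    moveCursor: (user, cursor) => dispatch({ type: 'awareness/moveCursor', payload: { user, cursor } }),
    setOnline: (user, online) => dispatch({ type: 'awareness/setOnline', payload: { user, online } }),
  };
}

// Fake for view tests: records calls instead of dispatching.
class FakeAwarenessViewModel implements AwarenessViewModel {
  calls: string[] = [];
  moveCursor(user: string, cursor: CursorPosition): void {
    this.calls.push(`moveCursor ${user} ${cursor.x},${cursor.y}`);
  }
  setOnline(user: string, online: boolean): void {
    this.calls.push(`setOnline ${user} ${online}`);
  }
}

const store = createStore({ users: { alice: { name: 'Alice', cursor: { x: 0, y: 0 }, online: true } } });
const viewModel = createAwarenessViewModel(store.dispatch);
viewModel.moveCursor('alice', { x: 40, y: 25 });
console.log(store.getState().users.alice.cursor); // { x: 40, y: 25 }
```

Because components depend only on the interface, a view test can hand them a `FakeAwarenessViewModel` and assert on its recorded calls, which is what the prompt’s “fake view model via the view model interface” pattern asks for.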

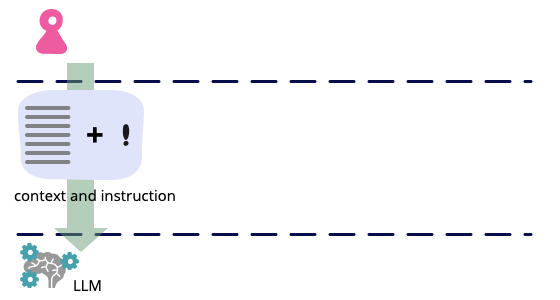

Xu Hao begins his session by prompting the ChatGPT LLM with context as well as the instruction to generate a plan

Even though the final goal of this session is to generate code and its tests, at this stage he specifically said “Don’t generate code”.

At this point he wants ChatGPT to outline its approach before we look to any code generation. This is a prompt technique called Generated Knowledge.

A common way of interacting with an LLM is to give it a problem and ask it to generate some final product, whether that be prose or (in this case) code. With Generated Knowledge we split our interaction into separate steps: first ask the LLM to generate some useful information about the problem, and then feed that information back into the LLM to generate the final product.

The most interesting part of the prompt is the “implementation strategy” section, which combines two styles of prompt:

- Instruction prompting provides instructions for the LLM to follow
- Chain of Thought prompting encourages the LLM to explain its reasoning.

In the Implementation Strategy section, Xu Hao described the desired architecture pattern as an expected “Chain of Thought instructions” for ChatGPT to follow. Then he instructed ChatGPT to build a task list (the generated knowledge) based on this chain of thought.
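The split described above, a first request that produces a plan under chain-of-thought instructions followed by requests that feed that plan back in, can be sketched as a small function. This is an illustration under stated assumptions: `Llm` is a hypothetical stand-in for whatever chat client is in use (it is not a ChatGPT API), and the prompt wording is abbreviated from Xu Hao’s.

```typescript
// Hypothetical chat client: receives the whole conversation, returns the reply text.
type Message = { role: 'user' | 'assistant'; content: string };
type Llm = (conversation: Message[]) => Promise<string>;

// Step 1 (generated knowledge): context + numbered strategy + requirement, explicitly no code.
// Step 2: the plan stays in the conversation and a follow-up asks for code for one task.
async function planThenImplement(
  llm: Llm,
  context: string,      // tech stack and architecture description
  strategy: string[],   // chain-of-thought instructions, one per step
  requirement: string,
  taskNumber: number,
): Promise<{ plan: string; code: string }> {
  const strategyText = strategy.map((step, i) => `${i + 1}. ${step}`).join('\n');
  const conversation: Message[] = [{
    role: 'user',
    content: [
      context,
      'Here are the common implementation strategies:',
      strategyText,
      requirement,
      "Don't generate code. Describe the solution as a task list; we will refer to it as our master plan.",
    ].join('\n\n'),
  }];
  const plan = await llm(conversation);
  conversation.push({ role: 'assistant', content: plan });
  conversation.push({
    role: 'user',
    content: `Provide example tests and implementation for task ${taskNumber} of the master plan. Test first.`,
  });
  const code = await llm(conversation);
  return { plan, code };
}
```

Keeping the plan in the conversation is what lets later prompts refer to a task simply by its number.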

Solution:

To implement the awareness layer feature, we can follow the guidelines mentioned in the problem statement and use the existing tech stack.

Task List:

1. Create a new component named “AwarenessInfo” to render cursor and name for remote users.
2. Create a new Konva layer to hold all the “AwarenessInfo” components.
3. Add the Konva layer to the main whiteboard component.
4. Create a new Redux slice to store the awareness information for remote users.
5. Create an encapsulated view model interface for the awareness Redux slice.
6. Implement the “AwarenessInfo” component to retrieve and display the awareness information for each remote user from the view model.
7. Implement a method in the encapsulated view model interface to update the awareness information for each remote user.
8. When a remote user changes the cursor location, dispatch the update method in the encapsulated view model interface to update the corresponding awareness information.
9. In the “AwarenessInfo” component, use the new location to animate the cursor change.
10. Write tests for the new “AwarenessInfo” component, including data-driven tests to ensure it properly displays the awareness information for all remote users.
11. Write tests for the encapsulated view model interface to ensure it properly updates the awareness information for remote users.
12. Write end-to-end Cypress tests to ensure the awareness layer is properly displayed and updated when remote users change their cursor location.

By following these tasks, we can implement the awareness layer feature in the online whiteboard system.
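The plan’s step of animating the cursor change (AC2) comes down to interpolating between the old and new positions over a short time. The revised plan later suggests Konva’s `Tween` for this; the sketch below does not use Konva and only illustrates the underlying idea, assuming a smoothstep ease-in-out curve and a fixed number of frames, both of which are illustrative choices.

```typescript
type Point = { x: number; y: number };

// Smoothstep ease-in-out: slow at both ends, faster in the middle. Expects t in [0, 1].
function easeInOut(t: number): number {
  return t * t * (3 - 2 * t);
}

// Eased position at progress t (clamped to [0, 1]) between `from` and `to`.
function interpolate(from: Point, to: Point, t: number): Point {
  const k = easeInOut(Math.min(1, Math.max(0, t)));
  return { x: from.x + (to.x - from.x) * k, y: from.y + (to.y - from.y) * k };
}

// Positions for an animation of `frames` steps (frames >= 1), including both endpoints.
function cursorFrames(from: Point, to: Point, frames: number): Point[] {
  return Array.from({ length: frames + 1 }, (_, i) => interpolate(from, to, i / frames));
}

const path = cursorFrames({ x: 0, y: 0 }, { x: 100, y: 200 }, 4);
console.log(path[2]); // { x: 50, y: 100 }
```

In a component, each frame’s position would be applied to the cursor shape on successive animation ticks; a library tween handles that scheduling for you.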

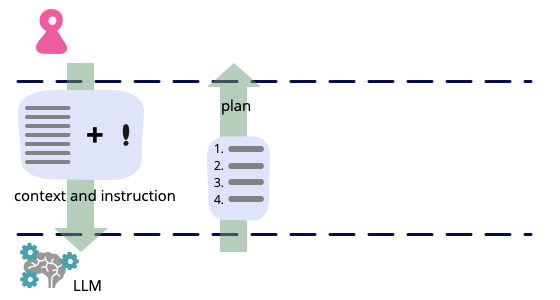

The reaction from ChatGPT is non-deterministic, so this outcome is simply.

one run. If you (or I) put this timely in once again, we’ll get a various reaction.

The crucial element of the reaction is a purchased job list, with numbers for.

each action. We can utilize these numbers to describe these jobs for the rest of.

the session.

The LLM reacts with a strategy.

Xu Hao can now look at the master plan and see if it makes sense. If there are problems with it, he can ask ChatGPT to rewrite some of the tasks or the entire master plan by providing more information.

For example, the current master plan was not grouped by different types of components. One possible prompt might be:

Rewrite the master plan, and group tasks as model, view model and view.

This could be useful to verify the alignment between the architecture vision and the master plan.

These tweaks could be added to the initial prompt to get an overall better response. For this particular prompt, the following instruction was not in the initial prompt, but was added in a later iteration:

4. Don’t dispatch actions directly to change the states of shared view model; use an encapsulated view model interface instead. In the interface, each redux action is mapped to a method. Tested in vitest.

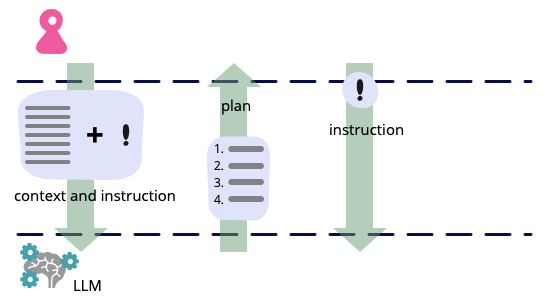

Once the master plan looks reasonable, Xu Hao asks to regenerate it with names for the program elements.

Rewrite the master plan, this time with detailed component names, methods, and props

Sure, here’s a revised master plan with detailed component names, methods, and props:

Task List:

1. Create a new component named “AwarenessInfo” to render cursor and name for remote users. This component will receive props such as user name, cursor position, and online status.
2. Create a new Konva layer named “AwarenessLayer” to hold all the “AwarenessInfo” components.
3. Add the “AwarenessLayer” to the main whiteboard component.
4. Create a new Redux slice named “awareness” to store the awareness information for remote users.
5. Create an encapsulated view model interface named “AwarenessViewModel” for the “awareness” Redux slice. This interface will contain a method named “updateUserAwarenessInfo” to update the awareness information for each remote user.
6. Implement the “AwarenessInfo” component to retrieve and display the awareness information for each remote user from the view model. The component will use the “useSelector” hook to retrieve the relevant information.
7. Implement the “updateUserAwarenessInfo” method in the “AwarenessViewModel” interface to dispatch a Redux action to update the awareness information for each remote user. This method will be called by the “MainWhiteboard” component.
8. When a remote user changes the cursor location, dispatch the “updateUserAwarenessInfo” method in the “AwarenessViewModel” interface to update the corresponding awareness information.
9. In the “AwarenessInfo” component, use the new location to animate the cursor change. This can be accomplished using the Konva “Tween” animation.
10. Write tests for the new “AwarenessInfo” component, including data-driven tests to ensure it properly displays the awareness information for all remote users. The tests will be written using the React Testing Library.
11. Write tests for the encapsulated view model interface to ensure it properly updates the awareness information for remote users. These tests will use the Vitest framework.
12. Write end-to-end Cypress tests to ensure the awareness layer is properly displayed and updated when remote users change their cursor location. The tests will simulate user interactions with the whiteboard and verify the state of the “AwarenessLayer” and “AwarenessInfo” components.
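The revised plan has `AwarenessInfo` read its data with the `useSelector` hook, and the prompt asks for `createSelector` to provide memoization. The sketch below shows, without React or Reselect, what such a selector would compute for AC1 (all users except the local one) and how memoization avoids recomputing when the inputs are unchanged. `createMemoizedSelector`, `selectRemoteUsers`, and the `localUserId` field are hypothetical; Reselect’s real `createSelector` has a richer API and caching options.

```typescript
type UserAwareness = { name: string; cursorPosition: { x: number; y: number }; online: boolean };
// Hypothetical root state: `localUserId` identifies the user viewing this whiteboard.
type RootState = { localUserId: string; awareness: { users: Record<string, UserAwareness> } };

// Tiny stand-in for createSelector: caches the last inputs and result (reference equality).
function createMemoizedSelector<S, A, B, R>(
  selectA: (state: S) => A,
  selectB: (state: S) => B,
  combine: (a: A, b: B) => R,
): (state: S) => R {
  let lastA: A | undefined;
  let lastB: B | undefined;
  let lastResult: R | undefined;
  let hasResult = false;
  return (state) => {
    const a = selectA(state);
    const b = selectB(state);
    if (!hasResult || a !== lastA || b !== lastB) {
      lastResult = combine(a, b);
      lastA = a;
      lastB = b;
      hasResult = true;
    }
    return lastResult as R;
  };
}

// AC1: every user except the local one, as [id, awareness] pairs.
const selectRemoteUsers = createMemoizedSelector(
  (state: RootState) => state.awareness.users,
  (state: RootState) => state.localUserId,
  (users, localUserId) => Object.entries(users).filter(([id]) => id !== localUserId),
);

const state: RootState = {
  localUserId: 'me',
  awareness: {
    users: {
      me: { name: 'Me', cursorPosition: { x: 0, y: 0 }, online: true },
      alice: { name: 'Alice', cursorPosition: { x: 10, y: 20 }, online: true },
    },
  },
};
const remote = selectRemoteUsers(state);
console.log(remote.map(([id]) => id)); // [ 'alice' ]
console.log(selectRemoteUsers(state) === remote); // true (cached result)
```

Returning the same array for unchanged inputs matters because `useSelector` compares results by reference, so a fresh array on every call would force a re-render after every dispatched action.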

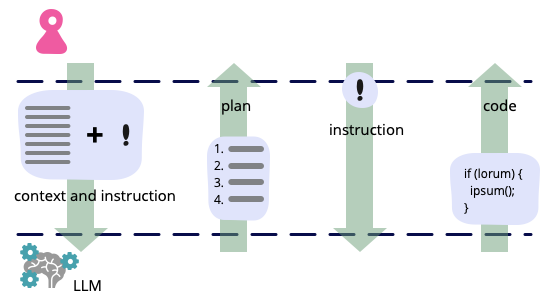

At this point, Xu Hao is ready to ask ChatGPT to provide code for one of the steps

provide me example tests and implementation for the awareness slice and AwarenessViewModel. Test first.

Sure, let's start with writing tests for the awareness slice and AwarenessViewModel:

Test for Awareness Slice:

```typescript
describe('awareness slice', () => {
  let store: MockStore;

  beforeEach(() => {
    store = configureMockStore()(initialState);
  });

  it('should set the cursor location', () => {
    store.dispatch(setCursorLocation({ x: 100, y: 200 }));
    const state = store.getState().awareness;
    expect(state.cursorLocation).toEqual({ x: 100, y: 200 });
  });

  it('should set the name', () => {
    store.dispatch(setName('John Doe'));
    const state = store.getState().awareness;
    expect(state.name).toBe('John Doe');
  });

  it('should set the online status', () => {
    store.dispatch(setOnline(true));
    const state = store.getState().awareness;
    expect(state.isOnline).toBe(true);
  });
});
```

Test for AwarenessViewModel:

```typescript
describe('AwarenessViewModel', () => {
  let store: MockStore;
  let awarenessViewModel: AwarenessViewModel;

  beforeEach(() => {
    store = configureMockStore()(initialState);
    awarenessViewModel = new AwarenessViewModel(store);
  });

  it('should set the cursor location', () => {
    awarenessViewModel.setCursorLocation({ x: 100, y: 200 });
    const state = store.getState().awareness;
    expect(state.cursorLocation).toEqual({ x: 100, y: 200 });
  });

  it('should set the name', () => {
    awarenessViewModel.setName('John Doe');
    const state = store.getState().awareness;
    expect(state.name).toBe('John Doe');
  });

  it('should set the online status', () => {
    awarenessViewModel.setOnline(true);
    const state = store.getState().awareness;
    expect(state.isOnline).toBe(true);
  });
});
```

Now, let's implement the Awareness slice and AwarenessViewModel:

Awareness slice:

```typescript
interface AwarenessState {
  cursorLocation: { x: number; y: number };
  name: string;
  isOnline: boolean;
}

const initialState: AwarenessState = {
  cursorLocation: { x: 0, y: 0 },
  name: '',
  isOnline: false,
};

const awarenessSlice = createSlice({
  name: 'awareness',
  initialState,
  reducers: {
    setCursorLocation: (state, action: PayloadAction<{ x: number; y: number }>) => {
      state.cursorLocation = action.payload;
    },
    setName: (state, action: PayloadAction<string>) => {
      state.name = action.payload;
    },
    setOnline: (state, action: PayloadAction<boolean>) => {
      state.isOnline = action.payload;
    },
  },
});

export const { setCursorLocation, setName, setOnline } = awarenessSlice.actions;

export default awarenessSlice.reducer;
```

AwarenessViewModel:

```typescript
class AwarenessViewModel {
  private store: Store;

  constructor(store: Store) {
    this.store = store;
  }

  setCursorLocation(cursorLocation: { x: number; y: number }) {
    this.store.dispatch(setCursorLocation(cursorLocation));
  }

  setName(name: string) {
    this.store.dispatch(setName(name));
  }

  setOnline(isOnline: boolean) {
    this.store.dispatch(setOnline(isOnline));
  }
}
```

Note: In this implementation, I assumed that the AwarenessViewModel is used to set the values in the store. If it is also used to retrieve the values from the store, we can add corresponding methods to the AwarenessViewModel class.

(I’ve removed the styling from ChatGPT’s response.)

Overall this code is neither bad nor perfect. It won’t run as it stands. It’s missing a configureMockStore and assumes it uses redux-mock-store, which isn’t part of the tech stack. Xu Hao can fix these problems by asking the LLM to progressively tweak the code through a sequence of rewrites. Here’s one to get rid of redux-mock-store.

rewrite the tests without redux-mock-store

Sure, here’s an example implementation of the tests for the AwarenessViewModel and the awareness slice without using redux-mock-store:

```typescript
import { createAwarenessSlice, AwarenessState, updateAwareness } from './awarenessSlice';
import { AwarenessViewModel } from './AwarenessViewModel';

describe('AwarenessViewModel', () => {
  const mockDispatch = jest.fn();
  const initialState: AwarenessState = {
    users: {
      'user1': {
        name: 'User 1',
        cursorPosition: { x: 0, y: 0 },
        online: true,
      },
      'user2': {
        name: 'User 2',
        cursorPosition: { x: 100, y: 100 },
        online: true,
      },
    },
  };
  let viewModel: AwarenessViewModel;

  beforeEach(() => {
    viewModel = new AwarenessViewModel(initialState, mockDispatch);
  });

  describe('getUserNames', () => {
    it('returns the list of user names', () => {
      const names = viewModel.getUserNames();
      expect(names).toEqual(['User 1', 'User 2']);
    });
  });

  describe('getCursorPosition', () => {
    it('returns the cursor position for a given user ID', () => {
      const cursorPosition = viewModel.getCursorPosition('user1');
      expect(cursorPosition).toEqual({ x: 0, y: 0 });
    });

    it('returns undefined for an unknown user ID', () => {
      const cursorPosition = viewModel.getCursorPosition('unknown');
      expect(cursorPosition).toBeUndefined();
    });
  });

  describe('updateCursorPosition', () => {
    it('dispatches the updateAwareness action with the new cursor position', () => {
      viewModel.updateCursorPosition('user1', { x: 50, y: 50 });
      expect(mockDispatch).toHaveBeenCalledWith(updateAwareness({
        user: 'user1',
        cursorPosition: { x: 50, y: 50 },
      }));
    });
  });
});
```

And here’s an example implementation of the awarenessSlice without using redux-mock-store:

```typescript
import { createSlice, PayloadAction } from '@reduxjs/toolkit';

export type CursorPosition = { x: number, y: number };

export type UserAwareness = {
  name: string;
  cursorPosition: CursorPosition;
  online: boolean;
};

export type AwarenessState = {
  users: Record<string, UserAwareness>;
};

const initialState: AwarenessState = {
  users: {},
};

const awarenessSlice = createSlice({
  name: 'awareness',
  initialState,
  reducers: {
    updateAwareness: (state, action: PayloadAction<{ user: string, cursorPosition: CursorPosition }>) => {
      const { user, cursorPosition } = action.payload;
      state.users[user].cursorPosition = cursorPosition;
    },
    setOnline: (state, action: PayloadAction<{ user: string, online: boolean }>) => {
      const { user, online } = action.payload;
      state.users[user].online = online;
    },
  },
});

export const { updateAwareness, setOnline } = awarenessSlice.actions;

export const awarenessReducer = awarenessSlice.reducer;
```

Note that since we’re not using redux-mock-store, we need to pass a mock dispatch function to the view model constructor, and in the test for updateCursorPosition, we need to check that the correct action was dispatched using jest.fn().

Further rewrites can fit in more adjustments. For example, asking it to “Use `configureTestingStore('awareness', initialState)` to initialize the test store” can get it to fit in with the team’s test idioms. Then, with some path adjustments and a small amount of glue for the reducers, the code can run just fine.

Once this is working, Xu Hao can repeat the process for the rest of the tasks in the master plan.
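ChatGPT’s rewritten tests exercise an `AwarenessViewModel` built from a state object and a `dispatch` function, but the response does not include that class. A minimal sketch of one that would satisfy those tests follows, assuming exactly the constructor and methods the tests call. To stay dependency-free it inlines a stand-in for the slice’s `updateAwareness` action creator, producing the `{ type: 'awareness/updateAwareness', payload }` object that Redux Toolkit’s `createSlice` generates for a reducer of that name in a slice named `awareness`; the rest is an assumption, not code from the session.

```typescript
type CursorPosition = { x: number; y: number };
type UserAwareness = { name: string; cursorPosition: CursorPosition; online: boolean };
type AwarenessState = { users: Record<string, UserAwareness> };

// Stand-in for the action creator createSlice would generate for `updateAwareness`
// in a slice named 'awareness' (same { type, payload } shape).
const updateAwareness = (payload: { user: string; cursorPosition: CursorPosition }) => ({
  type: 'awareness/updateAwareness' as const,
  payload,
});
type AwarenessAction = ReturnType<typeof updateAwareness>;

class AwarenessViewModel {
  private readonly state: AwarenessState;
  private readonly dispatch: (action: AwarenessAction) => void;

  constructor(state: AwarenessState, dispatch: (action: AwarenessAction) => void) {
    this.state = state;
    this.dispatch = dispatch;
  }

  // Read side: derive view data from the state snapshot.
  getUserNames(): string[] {
    return Object.values(this.state.users).map((user) => user.name);
  }

  getCursorPosition(userId: string): CursorPosition | undefined {
    return this.state.users[userId]?.cursorPosition;
  }

  // Write side: one method per action, so callers never dispatch directly.
  updateCursorPosition(user: string, cursorPosition: CursorPosition): void {
    this.dispatch(updateAwareness({ user, cursorPosition }));
  }
}
```

Taking a state snapshot in the constructor keeps the class trivial to test; in a running component, the read methods would more likely become hooks built on `useSelector`, as the prompt’s strategy suggests.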

One of the problems of working with ChatGPT in exercises like this is the limited context window (also called “token limit”). Once it receives enough words (or more strictly tokens) in the conversation, it starts forgetting the earliest material, an effect that makes it curiously forgetful. That’s not noticeable for short chats, but it does matter when working with large amounts of context as in this exercise. ChatGPT has been steadily increasing its context window: GPT-4 has a token limit of 8,192, with another variant increased to 32,768.

Xu Hao finds the token limit has three main impacts on his work. Firstly, ChatGPT may stop generating content: this is fairly easy to fix, as we can prompt it with something like “you are not finished” or “go on”. Secondly, if the overall prompt is too big, then ChatGPT will simply return a fatal error and we have to start a new conversation.

The third is the trickier problem, where ChatGPT starts forgetting and losing context. When this happens we need to reset the context. This is where the approach of getting a master plan and breaking down the task into separate elements helps. We can start a new conversation with the original strategy and the master plan, and ask it to generate the code for a different item on the plan.
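The reset described above (a new conversation seeded with the original strategy and the master plan, then a request for a different item) is mechanical enough to script. A minimal sketch, assuming the master plan is kept as the numbered list ChatGPT returned; `parseMasterPlan` and `freshSessionPrompt` are hypothetical helpers, and the size check uses a rough rule of thumb of about four characters per English token rather than a real tokenizer, so it only flags prompts that are likely to exceed GPT-4’s 8,192-token window.

```typescript
// Parse lines like "4. Create a new Redux slice ..." into a map keyed by task number.
function parseMasterPlan(plan: string): Map<number, string> {
  const tasks = new Map<number, string>();
  for (const line of plan.split('\n')) {
    const match = /^\s*(\d+)\.\s+(.*)$/.exec(line);
    if (match) tasks.set(Number(match[1]), match[2].trim());
  }
  return tasks;
}

// Rough heuristic only (about 4 characters per token for English text); not a tokenizer.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Build the opening prompt of a new conversation focused on one task of the plan.
function freshSessionPrompt(
  context: string,        // the original prompt with the implementation strategy
  masterPlan: string,     // the numbered task list from the earlier session
  taskNumber: number,
  tokenLimit = 8192,
  reserveForReply = 2048, // illustrative room left for the generated code
): string {
  const task = parseMasterPlan(masterPlan).get(taskNumber);
  if (task === undefined) throw new Error(`No task ${taskNumber} in the master plan`);
  const prompt = [
    context,
    'Here is our master plan:',
    masterPlan,
    `Provide example tests and implementation for task ${taskNumber}: ${task} Test first.`,
  ].join('\n\n');
  if (estimateTokens(prompt) > tokenLimit - reserveForReply) {
    throw new Error('Prompt is likely too large for the context window; trim the context or the plan');
  }
  return prompt;
}

const plan = '1. Create the AwarenessInfo component.\n2. Create the AwarenessLayer.';
console.log(parseMasterPlan(plan).get(2)); // Create the AwarenessLayer.
```

The default reserve of 2,048 tokens is an arbitrary illustrative value; the point is that the context window has to hold both the prompt and the generated code.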

Xu Hao found that the chain of thought in the context was key to making the generated code fit together despite being generated in separate sessions. On occasion he needed to add a hint to the prompt to generate cohesive code, but found he got better results from revising the chain of thought instead.

My takeaway from this discussion was that chain of thought and generated knowledge prompting can be a significantly useful tool for programming. In particular, it shows that to use LLMs well, we need to learn how to construct prompts to get the best results. This experience suggests that it’s useful to interact with the LLM like a junior partner: starting them with architectural guidelines, asking them to show their reasoning, and tweaking their outputs as we go.